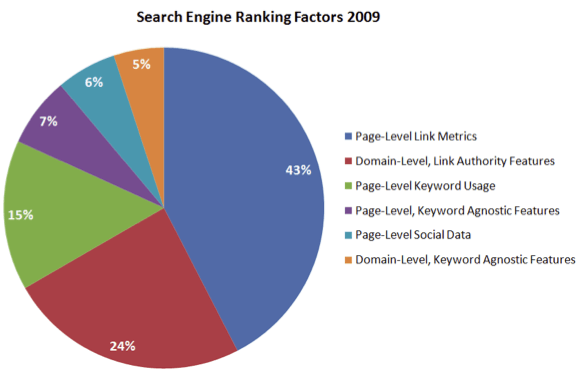

SEOmoz has updated its compilation of search engine ranking factors, which it publishes every two years. We think this is essential reading for everyone in digital marketing, not just SEO specialists, since it shows how best to succeed in SEO and what to focus your agency on. In 2009 the top 5 ranking factors were:

- Keyword-focused anchor text from external links

- External link popularity

- Diversity of external link sources

- Keyword use anywhere in title tags

- Trustworthiness of Domain based on Link Distance from Trusted Domains

Here are the key charts of ranking factors from the report [Editor's note: I use this to show that page keywords are relatively unimportant compared to anchor text and domain authority]:

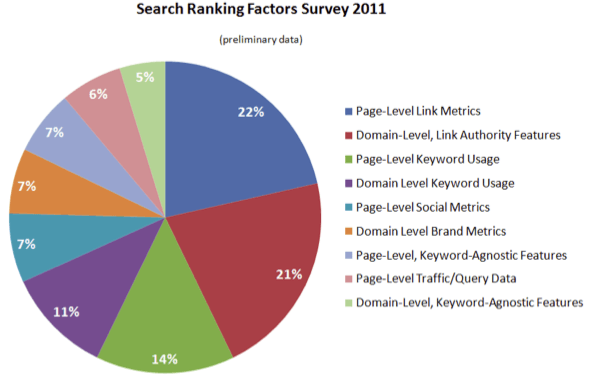

Although it is still early days for the research, the 2011 update from SEOmoz is based on a survey of 132 SEO professionals and correlation data from 10,000+ keywords. Check out slides 12 and 13 of the SlideShare presentation.

The 2011 analysis loses some of the clarity of the 2009 analysis. Key differences to take note of in 2011 are:

- Introduction of page level social factors (is your content shareable & good enough for people to want to share?)

- The overall value of external links has shrunk (though new fields have been added which may skew data)

- Domain level brand metrics are introduced as a key factor

It's that magical time of year when all of us who foolishly assumed the mantle of clairvoyance last December check up on our abilities and repeat our arrogant presumption again. Not surprisingly, something compels me to try again, despite the odds, but I am feeling a bit whimsical tonight, so let's make a game out of the prediction practice.

For each prediction (mine and others), we can grade using the following points system:

- Spot On (+2) - when a prediction hits the nail on the head and the primary criteria are fulfilled

- Partially Accurate (+1) - predictions that are in the area, but are somewhat different than reality

- Not Completely Wrong (-1) - those that landed near the truth, but couldn't be called "correct" in any real sense

- Off the Mark (-2) - guesses which didn't come close

The rule is - if the score is lower than +1, the blogger/industry leader/author isn't allowed to make predictions for the coming year.

So let's mark up my 8 predictions from last year and see whether new predictions are permitted:

- This Real Time Search Thing is Outta Here (+1) - technically, it's still around, though far less prevalent. I said "In 2010, I think this fades away. Perhaps not entirely, but we won't be seeing it for nearly as many queries with the prevalence we do today," which, on the scoring scale, probably deserves a "partially accurate."

- Twitter's Link Graph is the Real Deal (+2) - my guess seems oddly prescient when compared to Google + Bing's interview a few weeks back. "Google's not going to just take raw number of tweets or re-tweets. I think we're already seeing the relevance and reputation calculations in their decisions of which tweets and sources to show in the real-time results, and I expect that algorithms/metrics like PageRank, TrustRank, etc. will find their way into how Google uses the real-time data." I wonder if my luck can last.

- Personalized Search is Here to Stay (-1) - The title of this guess would make you think I'd got it right, but the substance is lacking. I noted that "If it's proven that you can get organic benefits by attracting PPC clickthrough, this may be the new 'paid inclusion' for 2010, and could drive bid prices up massively as companies compete not only for paid listing clicks, but for the chance to earn 'organic' positioning as well." Personalization bias didn't go towards brand exposure, and it actually hasn't got much stronger (apart from the localization element, which I didn't predict). Technically, it's still around, but it didn't become the juggernaut I thought it would.

- It's Going to Be a Two-Engine 80/20 World (+2) - Google's market share of web searches sending external traffic is likely very close to this (although Comscore reports only 66%, those numbers are heavily biased due to non-web-search "search" activity counted in the figures). A far better source would be something like StatCounter's referral data from the 15 billion pageviews/month on 3 million+ websites, which reports 81.88% for Google, and ~18% for Bing/Yahoo!. Given that Ask.com, Cuil and Yahoo! all folded their search operations this year, and Facebook/Twitter/Somebody Else Big hasn't entered the field, I'm giving this a "Spot On."

- Site Explorer and Linkdomain Will Disappear (+1) - Linkdomain is gone (at least in the US, and soon in most other countries), but it appears we'll still have until 2012(ish) with Site Explorer, so I'm giving this a (possibly slightly generous) rating of "partially accurate."

- SEO Spending Will Rise Dramatically (-1) - This one depends on the meaning of "dramatically." SEMPO's data suggests that 43% of marketers "expect" to spend more on SEO, but this is down 2% from 2009's survey. SEOmoz's own survey unfortunately doesn't compare apples to apples (we haven't asked the same question multiple years in a row and thus can't compare well). As of now, no new sources have come forward with data we're aware of (Forrester + eMarketer being the usual suspects). Thus, I'll give a "not completely wrong," since we really don't know.

- 2010 is the Year of Conversion Rate Optimization (-1) - Again, I'm going to say this was "not completely wrong" but it's also very tough to measure. We've had more speakers on CRO at search and marketing events of all varieties. Anecdotal reports would indicate CRO is becoming a more common and popular practice for organic marketers, but without solid numbers, it's hard to know. We can presume, however, that if there aren't lots of studies and data reports touting it, this probably wasn't "the year."

- More Queries Will Send Less Traffic (-1) - Given the launch of Google Instant, the personalization and localization of results, increased ranking inconsistency and more universal/vertical results in the SERPs, I'm going to say this is possibly near the mark, but not definitively correct. Google Instant, in particular, appears not to have moved the needle much on search demand and queries sending traffic. In fact, the only reason this is "not completely wrong" is due to my clever non-prediction of how many queries would send how much less traffic. :-)

Tallying the numbers, I'm seeing +6 and -4, for a total of +2, which means new predictions for 2011. I also invite you to analyze some of the many lists of predictions for this past year here. If my calculations are correct, Mashable and TechCrunch are out of the predictions business (the latter just barely) while the New York Times' Bits Blog should continue (though, like me, they made some pretty pansy predictions).
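For anyone who wants to check the arithmetic or grade another list of predictions, the tally above can be sketched in a few lines of Python. This is only an illustration: the labels are shortened paraphrases of the prediction titles, and the names `GRADES` and `may_predict` are made up for this example.

```python
# Grades as assigned in the list above (labels shortened for illustration).
GRADES = {
    "Real-time search fades": 1,
    "Twitter's link graph": 2,
    "Personalized search": -1,
    "Two-engine 80/20 world": 2,
    "Site Explorer and linkdomain disappear": 1,
    "SEO spending rises dramatically": -1,
    "Year of conversion rate optimization": -1,
    "More queries send less traffic": -1,
}


def may_predict(total: int) -> bool:
    """Rule from the post: a score lower than +1 means no new predictions."""
    return total >= 1


# Split the grades into the positive and negative piles, then combine them.
positives = sum(g for g in GRADES.values() if g > 0)
negatives = sum(g for g in GRADES.values() if g < 0)
total = positives + negatives

print(f"+{positives} and {negatives}, total {total:+d}")
print("New predictions allowed:", may_predict(total))
```

Swap in the grades for any other blogger's list to see whether they clear the +1 bar.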

Without further ado, my predictions for SEO in 2011:

#1: Someone Proves (or a Search Engine Confirms) that Clicks/Visits Influence Rankings

I'm taking a chance on this one, but I've been hearing from more and more SEOs that there's some correlation between earning clicks and moving up in the rankings. In 2011, we'll get confirmation, either through testing or an admission from an engine that click-through-rate from the SERPs, visit count outside of search (or diversity of sources), or other usage-based data is in the ranking algorithm (or a method they use to help ID spam).

#2: Google Local/Maps Adds Filters and Sorting

The big reason Yelp is so much better than Google Maps/Local for finding a good local "place" isn't just the reviews (which Google aggregates from Yelp anyway). It's the filters that let me sort by features/pricing/proximity/open status/etc. Google's been playing the silly game of forcing users to choose search queries to enable rough, imperfect filtering, but 2011 is going to see the search engine shift to a model that allows at least some important filters/feature-selection.

#3: Social Search Will Rise

There's power in social media search, and Google/Bing's efforts to date have been lackluster at best. I suspect in 2011, we'll see the nascent beginning of search that leverages Twitter/Facebook/LinkedIn connections to find results from your friends. It's possible this will start niche-based only (search articles your friends have shared, à la Trunk.ly), but it could also be broader - possibly something from Facebook or Twitter themselves.

#4: Rank Tracking Will Be Possible Through the Referral String

Google's been slowly growing the percentage of queries that contain the numeric position of the result in the referral data. Given how much this information means to marketers (even those who realize it's frequently not telling the whole story), and how much automated scraping/requests goes through each day, I'd venture to guess that Google will increase this further and maybe even add some support for it in GA (why force your engine to work harder and your impression counts to suffer unnecessarily?).

#5: Mobile Will Have a Negligible Effect on Search/SEO

For years, I've heard the prognostication that SEO and search are going to be flipped on their heads once mobile query usage takes off. I'll boldly predict that not only will mobile usage of search NOT skyrocket in 2011 on the long-awaited J-curve, but that the mobile and normal web browsing experiences will continue to merge toward a single experience, thus negating much of the need for mobile-specific sites and SEO. There'll always be mobile-related marketing opportunities in games and local (though these are hardly limited to mobile devices), but mobile SEO will pretty much just be "SEO."


#6: Software Will Become an SEO Standard

For the decade I've been in SEO, software and tools have always been a "nice-to-have" and not a "must-have" (with the possible exception of web analytics). In 2011, I see several SEO software companies growing to critical mass based on the market's demand, possibly including: Raven Tools, Conductor's Searchlight, Brightedge, SearchMetrics, RankAbove, DIYSEO and/or GinzaMetrics. Hubspot, while more of a CMS/holistic marketing tool, will also likely fit in this group as their SEO offerings get stronger. Oh, and SEOmoz's Web App could do pretty well, too :-)

#7: We'll Start to Move Away from the Title "SEO" to Something More All-Inclusive

For years, I've prided myself on being an SEO and embraced the title, the community, the positives and the negatives that come with it. But with the search engines expanding so far afield in the signals they consider and the verticals/media types they include, I have to face facts - SEO today calls for much more of a talented generalist than a pointed specialist. We need to be savvy about and good at so many facets of organic web marketing that to call us "SEOs" is less empowering and more limiting than in the past.

Now I'd love to hear some of your predictions for 2011 and see who's earned scores predicting 2010 that give them the right to guess about 2011.

p.s. I didn't take the obvious "Google's going to crack down on more link spam" or "Social's going to be even more important" prediction gimmes, because I just don't think I'd respect myself tomorrow morning.